High Availability & Disaster Recovery

Client Requirements

Understanding the client requirements is crucial before beginning the backup procedure. Depending on their operational requirements, every organization may have distinct backup and recovery objectives. Potential factors are explained below.

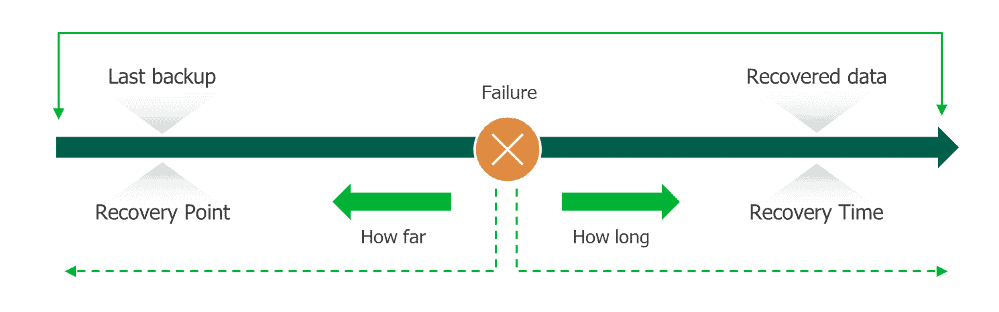

1. Recovery Point Objective (RPO)

The acceptable data loss threshold, specifying how much data the organization is willing to lose in the event of a disaster. It also helps you measure how long it can take between the last data backup and a disaster without seriously damaging your business. RPO is useful for determining how often to perform data backups.

For example, RPOs with very low values, such as less than one minute, might need continuous replication of critical files, databases, and systems. This is the RPO, to have backed up data as current as possible.

Another example is the scenario where you back up your data once a day at midnight (12:00 AM) and there is a disaster at 8:00 AM. In this case, you will lose 8 hours of data. If your RPO is 24 hours or more, you’re in good shape. But if your RPO is, say, four hours, you’re not.

2. Recovery Time Objective (RTO)

The target time within which the system should be recovered and operational after a failure.

For example, if you set your RTO as 2 hours, then you should be able to continue normal business operations within this timeframe in case of any disaster. If during real-life disaster recovery, you go over the given time frame, you should either reconsider the RTO calculations or update your disaster recovery plan and procedures.

To calculate RTO, we need to consider these factors:

- The cost per hour of outage.

- The importance and priority of individual systems.

- Steps required to recover from a disaster including restoration of individual components and processes.

- Available budget and resources.

The following figure depicts the RPO and its relationship to the RTO.

Image taken from Global Data Vault

3. Storage capacity

Determine the storage space required for backups based on the organization’s data growth rate and retention policy.

Business Use Case

Physical backups using pg_basebackup are suitable for various scenarios, including:

- Full system recovery after a catastrophic failure, such as hardware failure or data corruption.

- Migrating a PostgreSQL database to a different server or platform (base backup doesn’t provide major version migrations)

- Setting up a standby or replica server for high availability or load balancing purposes.

- Point in time recovery

Backup Space Complexity

The backup space required depends on several factors, such as:

- The size of the PostgreSQL instance being backed up (as it includes all databases inside a single instance).

- The frequency of backups and retention policy.

- The compression method used during backup.

- It is crucial to regularly monitor the backup space usage to ensure it remains within acceptable limits and to plan for additional storage capacity as needed.

Recovering from pg_basebackup

In the event of a disaster, a physical backup created using pg_basebackup can be used to recover the PostgreSQL database. The recovery process typically involves the following steps:

- Stop the PostgreSQL service on the target server.

- Remove or rename the existing data directory on the target server.

- Use pg_basebackup to restore the backup from the source server to the target server.

- Start the PostgreSQL service on the target server.

Point-in-Time Recovery (PITR)

Point-in-Time Recovery (PITR) allows you to restore a PostgreSQL database to a specific point in time, using a combination of physical backups and archived WAL (Write-Ahead Logs). PITR is useful in scenarios where you need to recover from data loss or accidental changes.

Requirements

To perform a point-in-time recovery in PostgreSQL, the requirements are as follows:

– wal_level must be set to replica. Minimal mode doesn’t support PITR.

– archive_mode must be set to on

– archive_command must be set in postgresql.conf which archives WAL logs

– Full instance backup using pg_basebackup

1: Ensure that the PostgreSQL server is configured for archive mode and that the archive command is set inside postgresql.conf file.

For example

archive_command='cp %p /mnt/server/archivedir/%f'

where

%p is the absolute path of WAL (mostly pg_wal folder).

/mnt/server/archivedir/ is the archiving directory.

%f is a unique file name that will be created on the above path.

2: Take a full instance backup using pg_basebackup. Make sure to use -X stream method to fetch wals for consistent backups.

For example

/usr/pgsql-12/bin/pg_basebackup -D /var/lib/pgsql/12/basebackup --checkpoint=fast -Xs -P

where

-D is the backup directory

–checkpoint=fast will issue an immediate checkpoint on the PostgreSQL server which will flush all dirty pages from memory to disk

-Xs is for streaming wal during backup

-P is for the progress report

Recovery Steps

1: Let’s take a scenario where somebody deleted a critical table at 2023-06-14 01:00:00 and it was identified with proper logging. Now we want to restore the system state to before the table was deleted. We will stop PostgreSQL services, take a snapshot or move the current data directory to another secure place, and restore the last successful base backup inside the same directory.

2: After copying, we’ll configure restore_command and recovery_target_time inside postgresql.conf file

For example

restore_command = 'cp /mnt/server/archivedir/%f %p'

recovery_target_time = '2023-06-14 12:59:59.319298'

3: After setting the above parameters, we will create a recovery.signal (applies to PG12 and above) file inside the main data directory. When PostgreSQL discovers recovery.signal file during startup, it starts up in a special mode called “recovery mode”. When in recovery mode, client connections are refused. Postgres fetches WAL files from archives and applies them until the recovery target (in this case, changes up to the specified timestamp) is achieved. Make sure recovery.signal file is empty.

for example:

touch /var/lib/pgsql/12/data/recovery.signal

4: Start the PostgreSQL server and monitor logs. It will show that it is recovering from archives.

5: After reaching a consistent state, we can verify our tables and data as the database is in read-only mode initially. For turning it into read/write mode, we need to run the following command inside the PostgreSQL session:

select pg_wal_replay_resume();

This will increment the current timeline by 1 and the database will be available for writes.

6: After verification, make sure to revert the restore c

ommand/recovery parameters and restart the server.

Conclusion

In a PostgreSQL context, implementing a reliable backup strategy is essential for ensuring data integrity and recovering from calamities. Organizations can guarantee the availability and dependability of their PostgreSQL databases by utilizing pg_basebackup for physical backups and comprehending the procedures for recovery and point-in-time recovery. Don’t forget to adjust the backup plan to your unique needs and to test the recovery procedure frequently to confirm its efficacy.

FAQS

Q1: What are the ideal backup procedures for a production environment?

- Regular data backups should be done, albeit not all types of data should be backed up at the same time. Using continuous archiving, some data may be continuously backed up. Other backups might be done on a regular basis—daily, at night, or once a week.

- Periodic data backup checks should be carried out—at the very least, every six months or a year. Data backup testing may be performed more frequently depending on the size and resources of the organization.

- Keep a minimum of three backup copies.

- Setting up remote backups is a crucial component of your backup strategy. Although having backups elsewhere is desirable, they should at the very least be on a different system.

- Encrypting the files offers extra data protection in addition to the safety of backup sites (such as the cloud or physical).

- Documenting backup procedures should be done in detail. These should include an explanation of the objectives and strategies, specifications of the tools and procedures employed, the assignments of duties, the timing of backups, and retention schedules.

- Retention periods for data backups should be defined for data governance and storage costs. Backup schedules should correspond to retention schedules. For example, retain hourly and daily backups for a week, weekly backups for a month, and monthly backups for a few months or even years.

Q2: Why do we use -X stream method with pg_basebackup utility?

It is advised to use –wal-method=stream with pg_basebackup to ensure that the backup has enough wal files to at least approach a consistent state. It launches a second replication connection with the database to retrieve WAL generated during the backup. Now the backup has everything it needs to survive without WAL archive, which is a benefit.

Q3: How can we create a delayed replica? Is there any benefit of using a delayed replica in production systems?

Once you’ve configured streaming replication between primary and standby, you need to add the following parameter to the standby’s postgresql.conf file, restart the PostgreSQL service and then it will automatically delay a standby.

recovery_min_apply_delay = '1h'

High level steps for recovering from a delayed standby are the same as performing a PITR operation:

- Stop standby

- Set recovery parameter just like PITR inside postgresql.conf

- Create recovery.signal file

- Start the PostgreSQL server and monitor logs.

Example:

Let’s say we take physical backups at 12:00 am every day along with continuous archiving. Now someone dropped a table or modified data incorrectly inside a table at 11:00 pm. If we start recovering using the standard PITR method, it can take a lot of time as we have to upload the backup and replay 11 hours of WAL logs. Whereas if we had a delayed standby with recovery_min_apply_delay set to 1 hour, we can apply PITR on this standby and promote it as it has to apply only 1 hour of WAL logs.

After this, it’s up to us – we can either promote this standby into primary or export the important data to the primary instance.

Q4: Let’s say a disaster occurred at 12:00 am and we recovered till 11:59:59.9999 using PITR. Now at 1:00 pm, we realized that we needed to recover till 11:55:00. How can we re-run PITR?

This scenario includes the concept of timelines in PostgreSQL. A new timeline is created each time a PITR operation is carried out or a standby is promoted in order to distinguish the WAL records produced following that recovery. In order to prevent a new timeline from overwriting WAL data created by earlier timelines, the timeline ID number is included in the WAL segment file names. In reality, numerous separate timelines can be archived. This is really helpful when you need to attempt multiple point-in-time recoveries by trial and error until you get to the optimal point.

Let’s say after the first PITR operation, our timeline is switched to 6 (old timeline id was 5). Now we need to recover again till 11:55:00 in timeline 5. The contents of recovery parameters inside postgresql.conf will be as follows:

restore_command = 'cp /mnt/server/archivedir/%f %p' recovery_target_time = '2023-06-14 11:55:00' recovery_target_timeline = 5

This will help us in reaching our desired point and once recovered, it will switch to a new timeline 7.

Timelines concept is rarely explained and not easy to understand. Make sure to read below referenced links.

References

- PostgreSQL Wiki: https://wiki.postgresql.org/

- PostgreSQL Tutorial: https://www.postgresqltutorial.com/

- pg_basebackup Documentation: https://www.postgresql.org/docs/current/app-pgbasebackup.html

- Point-in-Time Recovery in PostgreSQL: https://www.postgresql.org/docs/current/continuous-archiving.html#PITR

- Delayed Replication in PostgreSQL: https://www.percona.com/blog/faster-point-in-time-recovery-pitr-postgresql-using-delayed-standby

- PostgreSQL Timeline Concept: https://www.alibabacloud.com/forum/read-291

- PostgreSQL Timeline Concept-2: https://www.2ndquadrant.com/en/blog/evolution-of-fault-tolerance-in-postgresql-time-travel/