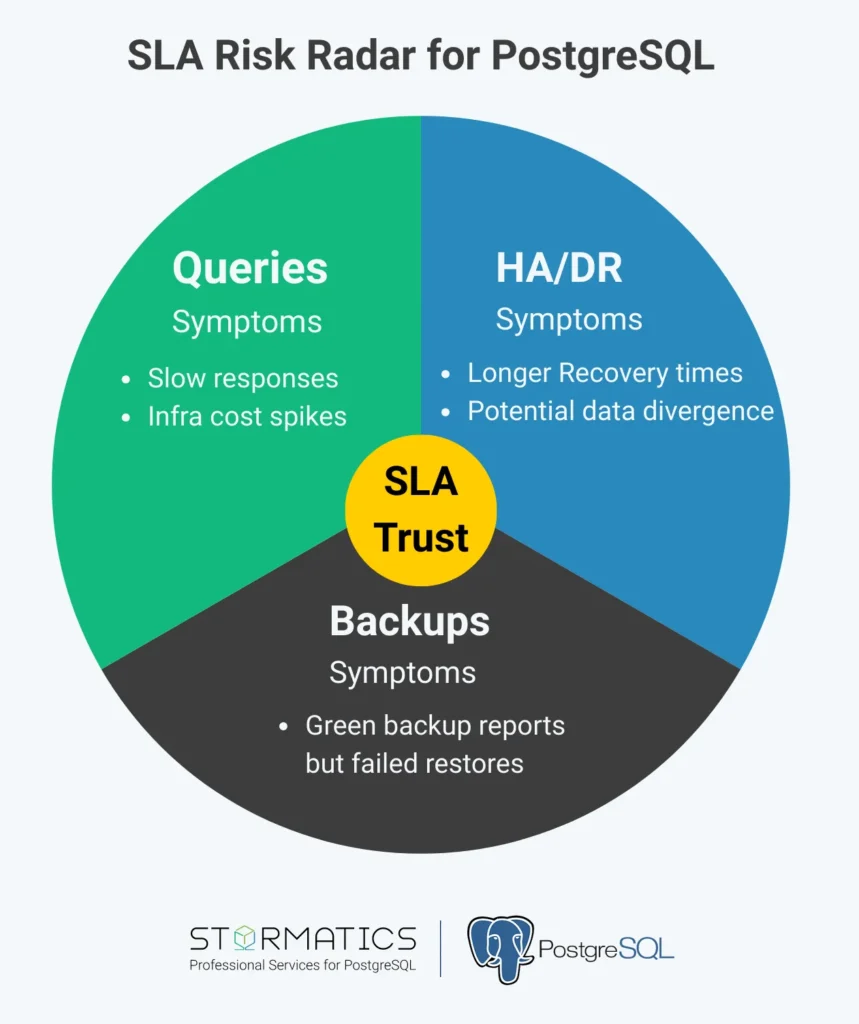

SLAs feel reassuring when they are signed, but their substance lies in what happens behind the scenes. Often the most damaging breaches stem not from cloud outages or server failures but from problems hidden in how PostgreSQL was initially set up and configured. Increasingly sluggish queries, split-brain scenarios, silent backup failures: any of these can suddenly escalate into a customer-facing crisis.

1. Slow Queries: The Sneaky SLA Saboteur

The Hidden Cost of Delayed Queries

A seemingly minor tuning oversight, such as a missing index or outdated statistics, can turn a 200 ms query into a 10-second slog. It might not seem urgent at first, but as concurrency increases, each slow query holds its connection longer, new requests queue up behind it, and the delays cascade.
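Why the delays cascade can be sketched with Little's law: the average number of requests in flight equals the arrival rate multiplied by the average latency. The sketch below assumes a hypothetical rate of 50 queries per second and a hypothetical pool of 100 connections; the numbers are illustrative, not measured.

```python
# Little's law: average in-flight work L = arrival rate (lambda) * latency (W).
# The rate and pool size below are hypothetical illustration values.


def in_flight_queries(arrivals_per_second: float, latency_seconds: float) -> float:
    """Average number of queries executing concurrently (Little's law)."""
    return arrivals_per_second * latency_seconds


def pool_saturated(arrivals_per_second: float, latency_seconds: float, pool_size: int) -> bool:
    """True when steady-state demand exceeds the available connections."""
    return in_flight_queries(arrivals_per_second, latency_seconds) > pool_size


if __name__ == "__main__":
    rate = 50.0  # hypothetical: 50 queries per second
    pool = 100   # hypothetical: 100 pooled connections
    for latency in (0.2, 10.0):
        busy = in_flight_queries(rate, latency)
        print(f"latency={latency}s in-flight={busy:.0f} saturated={pool_saturated(rate, latency, pool)}")
```

At 200 ms this workload keeps about 10 queries in flight; at 10 seconds it needs about 500, far more than the pool can hold, so requests start queueing and every caller waits longer.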

A Slow Query Accelerated 1000×

In one case study, an engineer faced a painfully slow query that sequentially scanned 50 million rows, even though it was a simple query that filtered on two columns (col_1, col_2) and returned only id. After creating an index on those columns with an INCLUDE (id) clause, query performance improved dramatically: what had taken seconds dropped to milliseconds, up to a 1,000× improvement in worst-case runtime. [Ref: Learnings from a slow query analysis in PostgreSQL]

This shows how even a simple query, if not indexed properly, can pose an SLA risk as data volume increases.
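Why INCLUDE helps can be sketched with a simplified model: an index-only scan is only an option when every column the query references is stored in the index. The `IndexDef` and `covers` names are hypothetical, and the model deliberately ignores the visibility map, expression indexes, and operator classes. The matching DDL would look like `CREATE INDEX ON t (col_1, col_2) INCLUDE (id);`, where the table name `t` is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexDef:
    """Hypothetical description of an index: key columns plus INCLUDE columns."""
    key_columns: tuple[str, ...]
    include_columns: tuple[str, ...] = ()

    def stored_columns(self) -> set[str]:
        return set(self.key_columns) | set(self.include_columns)


def covers(index: IndexDef, filter_columns: set[str], output_columns: set[str]) -> bool:
    """True if every column the query filters on or returns is stored in the index.

    This is a necessary condition for an index-only scan, not a sufficient one:
    PostgreSQL still consults the visibility map and may fetch heap pages.
    """
    return (filter_columns | output_columns) <= index.stored_columns()


if __name__ == "__main__":
    filters, outputs = {"col_1", "col_2"}, {"id"}
    plain = IndexDef(("col_1", "col_2"))
    covering = IndexDef(("col_1", "col_2"), include_columns=("id",))
    print(covers(plain, filters, outputs))     # False: id must come from the table
    print(covers(covering, filters, outputs))  # True: an index-only scan is possible
```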

PostgreSQL Mechanics: How This Happens

- Inefficient query plans: Without proper indexing, the query planner is forced into slow sequential scans on large tables.

- Index-only scans: Adding id to the index with PostgreSQL’s INCLUDE clause (available since PostgreSQL 11) makes an index-only scan possible, so the executor can skip fetching table rows for every page the visibility map marks as all-visible.

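- pg_stat_statements and EXPLAIN (ANALYZE): pg_stat_statements aggregates execution statistics per normalized query, revealing which statements consume the most time; EXPLAIN (ANALYZE) actually executes a query and shows the chosen plan alongside real row counts and timings.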

- Autovacuum and ANALYZE tuning: Keeping planner statistics up to date helps the query planner make smarter decisions and avoid inefficient execution paths.
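To act on EXPLAIN (ANALYZE) output programmatically, a script can walk the plan tree returned by `EXPLAIN (ANALYZE, FORMAT JSON)` and flag large sequential scans. This is a minimal sketch: the one-million-row threshold and the sample plan are hypothetical. Keep in mind that EXPLAIN (ANALYZE) really executes the statement, so run data-modifying queries inside a transaction you roll back.

```python
import json
from typing import Iterator


def iter_nodes(plan: dict) -> Iterator[dict]:
    """Yield a plan node followed by all of its descendants."""
    yield plan
    for child in plan.get("Plans", []):
        yield from iter_nodes(child)


def large_seq_scans(explain_json: str, min_rows_read: int = 1_000_000) -> list[tuple[str, int]]:
    """Return (relation, approximate rows read) for big Seq Scan nodes."""
    root = json.loads(explain_json)[0]["Plan"]
    flagged = []
    for node in iter_nodes(root):
        if node.get("Node Type") != "Seq Scan":
            continue
        loops = node.get("Actual Loops", 1)
        # EXPLAIN reports "Actual Rows" and "Rows Removed by Filter" per loop,
        # so multiply by the loop count to approximate total rows read.
        per_loop = node.get("Actual Rows", 0) + node.get("Rows Removed by Filter", 0)
        rows_read = int(per_loop * loops)
        if rows_read >= min_rows_read:
            flagged.append((node.get("Relation Name", "?"), rows_read))
    return flagged


if __name__ == "__main__":
    # Hand-written sample shaped like EXPLAIN (ANALYZE, FORMAT JSON) output.
    sample = json.dumps([{"Plan": {
        "Node Type": "Aggregate",
        "Plans": [{
            "Node Type": "Seq Scan", "Relation Name": "events",
            "Actual Rows": 12, "Actual Loops": 1, "Rows Removed by Filter": 49_999_988,
        }],
    }}])
    print(large_seq_scans(sample))
```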

Executive Takeaway

A query that drops from 10 seconds to 10 milliseconds directly reduces customer-facing latency, prevents support escalations, and can avoid costly hardware upgrades. It also gives the database headroom to absorb growth. Faster queries mean lower infrastructure spend and higher SLA confidence.

Pro Tip

Ask your team: Which queries currently dominate execution time, and what is their impact on customer latency?
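One way to answer that question is to rank statements by their share of total execution time. The sketch assumes rows already fetched with a query such as `SELECT query, calls, total_exec_time FROM pg_stat_statements;` (the column is named `total_exec_time` in PostgreSQL 13 and later, `total_time` before that); the class and function names and the sample rows are hypothetical.

```python
from typing import NamedTuple


class StatementStats(NamedTuple):
    """One row of pg_stat_statements (subset of columns)."""
    query: str
    calls: int
    total_exec_time: float  # milliseconds


def top_time_consumers(rows: list[StatementStats], n: int = 5) -> list[tuple[str, float, float]]:
    """Return (query, % of total execution time, mean ms per call) for the top n."""
    grand_total = sum(r.total_exec_time for r in rows) or 1.0  # avoid division by zero
    ranked = sorted(rows, key=lambda r: r.total_exec_time, reverse=True)[:n]
    return [
        (r.query, 100.0 * r.total_exec_time / grand_total, r.total_exec_time / max(r.calls, 1))
        for r in ranked
    ]


if __name__ == "__main__":
    # Made-up sample rows; real ones would come from the pg_stat_statements view.
    rows = [
        StatementStats("SELECT id FROM t WHERE col_1 = $1 AND col_2 = $2", 10_000, 900_000.0),
        StatementStats("UPDATE accounts SET balance = $1 WHERE id = $2", 50_000, 75_000.0),
        StatementStats("SELECT 1", 1_000_000, 25_000.0),
    ]
    for query, share, mean_ms in top_time_consumers(rows, n=2):
        print(f"{share:5.1f}%  {mean_ms:8.2f} ms/call  {query}")
```

Ranking by total time rather than by mean latency also surfaces queries that are individually fast but run so often that they dominate the load.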

2. High Availability: When “It Worked in Dev” Fails in Production

The Allure and Risk of Untested HA

HA plans are comforting on paper, but if they have never been tested under real load and realistic failure patterns, they are just assumptions masquerading as resilience.

Real-World SLA Impact

A developer survey underscores that many teams lack confidence in rapid recovery: only 21% felt very confident. For those teams, replication or failover failures escalate directly into SLA violations. (Ref: The True Cost of Slow Postgres Restores)

Case in Practice (An Industry Perspective)

A common PostgreSQL failure scenario: a primary node fails and no automated failover is in place, or, worse, a split-brain situation occurs because the original primary still believes it is the primary and keeps accepting writes after a replica has been promoted. The result is data that diverges between two nodes and a complex, multi-layered recovery. (Ref: The Most Common PostgreSQL Failure Scenarios)
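A monitoring check for the split-brain case can start from each node's answer to `SELECT pg_is_in_recovery();`, which returns false on a node that is acting as a primary. This is a minimal sketch with hypothetical node names; it only sees the nodes it can reach, so it complements the leader election and fencing an HA manager provides rather than replacing them.

```python
def acting_primaries(in_recovery: dict[str, bool]) -> list[str]:
    """Nodes whose pg_is_in_recovery() returned false, i.e. accepting writes."""
    return sorted(node for node, recovering in in_recovery.items() if not recovering)


def cluster_state(in_recovery: dict[str, bool]) -> str:
    """Classify the cluster from the answers collected by a monitoring job."""
    primaries = acting_primaries(in_recovery)
    if not primaries:
        return "no primary: writes are unavailable until a replica is promoted"
    if len(primaries) == 1:
        return f"ok: single primary {primaries[0]}"
    return "split-brain: multiple primaries " + ", ".join(primaries)


if __name__ == "__main__":
    # Hypothetical node names and answers.
    print(cluster_state({"pg-a": False, "pg-b": True, "pg-c": True}))
    print(cluster_state({"pg-a": False, "pg-b": False, "pg-c": True}))
```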

Postgres-specific Mechanics to Build Trustworthy HA

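- Replication slots and WAL archiving: Monitor both continuously. An inactive or lagging slot forces the primary to retain WAL until the disk fills, and a failing archive_command leaves the WAL archive behind, widening the data-loss window for point-in-time recovery.

- Failover management: Automate safe failover workflows and, more importantly, test them.

- Simulate failovers: Regular drills yield insights, not surprises. Measure actual recovery time and data loss against the RTO (recovery time objective) and RPO (recovery point objective) your SLA promises.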
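Drill results can be scored mechanically against the SLA. The sketch below assumes you record four timestamps per drill (the field names are hypothetical) and approximates data loss as the time between the last commit on the old primary and the newest of those commits that reached the new primary.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class DrillResult:
    """Timestamps recorded during one failover drill (hypothetical fields)."""
    failure_injected_at: datetime
    writes_resumed_at: datetime
    last_commit_on_old_primary: datetime
    last_commit_replicated: datetime  # newest old-primary commit found on the new primary

    @property
    def recovery_time(self) -> timedelta:
        return self.writes_resumed_at - self.failure_injected_at

    @property
    def data_loss_window(self) -> timedelta:
        # Commits acknowledged on the old primary that never reached the new one.
        return max(self.last_commit_on_old_primary - self.last_commit_replicated, timedelta(0))


def meets_sla(result: DrillResult, rto: timedelta, rpo: timedelta) -> dict[str, bool]:
    """Compare measured recovery time and data loss with the SLA targets."""
    return {
        "rto_met": result.recovery_time <= rto,
        "rpo_met": result.data_loss_window <= rpo,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0, 0)  # hypothetical drill start
    drill = DrillResult(
        failure_injected_at=t0,
        writes_resumed_at=t0 + timedelta(seconds=95),
        last_commit_on_old_primary=t0,
        last_commit_replicated=t0 - timedelta(seconds=2),
    )
    print(meets_sla(drill, rto=timedelta(seconds=60), rpo=timedelta(seconds=5)))
```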

Executive checkpoint questions

- Have we measured actual failover time against our SLA RTO commitments?

- Do we alert on WAL retained by replication slots and on replication lag?

- When was the last failover drill run under production-like load, and who signed off on it?

These are not questions for DBAs alone; tech leaders should be asking them to validate SLA resilience.
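Both replication alerts reduce to LSN arithmetic. A pg_lsn value such as `16/B374D848` encodes a 64-bit WAL byte position as two hexadecimal halves, and subtracting two positions gives the byte distance that `pg_wal_lsn_diff()` reports inside PostgreSQL. The sketch assumes the current position comes from `pg_current_wal_lsn()` and each slot's `restart_lsn` from the `pg_replication_slots` view; the slot names and the 1 GiB threshold are hypothetical.

```python
from typing import Optional


def lsn_to_int(lsn: str) -> int:
    """Convert a pg_lsn string such as '16/B374D848' to a 64-bit byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def lsn_diff(newer: str, older: str) -> int:
    """Bytes between two LSNs, like pg_wal_lsn_diff(newer, older)."""
    return lsn_to_int(newer) - lsn_to_int(older)


def slots_over_limit(current_wal_lsn: str, restart_lsns: dict[str, Optional[str]],
                     max_retained_bytes: int) -> list[str]:
    """Names of slots that hold back more WAL than max_retained_bytes."""
    return sorted(
        name for name, restart_lsn in restart_lsns.items()
        # A slot that has never been used can have a NULL restart_lsn; skip it.
        if restart_lsn is not None and lsn_diff(current_wal_lsn, restart_lsn) > max_retained_bytes
    )


if __name__ == "__main__":
    # Hypothetical values: one healthy slot and one abandoned slot.
    slots = {"replica_a": "1/FFFFFF00", "stale_slot": "0/0"}
    print(slots_over_limit("2/0", slots, max_retained_bytes=1 << 30))
```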

Pro Tip

Ask your team: What is our measured RTO/RPO, and how does it compare to what we’ve committed to customers?

3. Silent Backup Failures: “Looks Fine” Isn’t Recovery-Ready

The Dangers Lurking in Backup Jobs

Backups labeled as “successful” can be deceiving. Corrupt files, disk problems, or faulty automation can hide problems that surface only when you try to restore.

Anecdote: Cron Scripts Gone Wrong

As recounted in a real-world narrative:

“Not realizing their cron backups are misconfigured which leads to WAL never being expired by pgBackRest which leads to archive_command failing which leads to primary running out of disk space which leads to Patroni failover which leads to the replicas running out of disk space too.” (Ref: How Postgres is Misused and Abused in the Wild)

That chain of events shows how a backup misconfiguration snowballs quickly into a full-blown outage.
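The first link in that chain, a failing `archive_command`, is visible in the `pg_stat_archiver` view through its `last_archived_time` and `last_failed_time` columns. A minimal sketch, assuming those values have already been fetched, can flag the failure and estimate how long the retained WAL will take to fill the disk; the free space and WAL generation rate are hypothetical inputs you would measure yourself.

```python
from datetime import datetime
from typing import Optional


def archiving_failing(last_archived_time: Optional[datetime],
                      last_failed_time: Optional[datetime]) -> bool:
    """True if the most recent archive attempt recorded in pg_stat_archiver failed."""
    if last_failed_time is None:
        return False
    if last_archived_time is None:
        return True
    return last_failed_time > last_archived_time


def hours_until_disk_full(free_bytes: int, wal_bytes_per_hour: int) -> float:
    """While archiving fails, WAL segments are retained, so free space shrinks at the WAL rate."""
    if wal_bytes_per_hour <= 0:
        return float("inf")
    return free_bytes / wal_bytes_per_hour


if __name__ == "__main__":
    # Hypothetical readings: archiving last succeeded at 10:00 and failed at 11:00.
    failing = archiving_failing(datetime(2024, 1, 1, 10), datetime(2024, 1, 1, 11))
    print(failing, hours_until_disk_full(100 * 2**30, 4 * 2**30))
```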

Wide-Scale Data Recovery Failures

Many teams face hours of downtime during recovery, and with 26% of teams incurring SLA penalties, relying on “the backup ran” is no longer a defensible approach. Following best practices can prevent incidents like the one described in The Night I Didn’t Sleep: How Jira’s SLA Function Crashed Our PostgreSQL Cluster.

PostgreSQL Backup Mechanics That Surface Risk

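- pgBackRest, Barman, or logical backups with pg_dump must be paired with integrity validation.

- Enable data checksums and verify them with pg_checksums (named pg_verify_checksums before PostgreSQL 12). It requires a cleanly shut-down cluster, which makes a restored copy a convenient place to run it.

- Alert automatically when an integrity check fails.

- Run test restores periodically, at least quarterly, to prove that backups work when they are needed.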
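Integrity validation can be as simple as comparing each backup file against a recorded digest. This is a generic sketch with a hypothetical manifest format (relative path mapped to a SHA-256 hex digest); pgBackRest and Barman keep their own metadata, so in practice prefer their built-in checks. Matching digests prove the files are intact, not that they restore, which is why the test restores still matter.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 without loading it all into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backup(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means every file matched."""
    problems = []
    for rel_path, expected in manifest.items():
        path = root / rel_path
        if not path.is_file():
            problems.append(f"missing: {rel_path}")
        elif sha256_of(path) != expected:
            problems.append(f"checksum mismatch: {rel_path}")
    return problems
```

A non-empty result from `verify_backup` is exactly the kind of failed integrity check that should trigger an alert.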

Pro Tip

Ask your team: When was the last successful restore drill, and how long did it take?
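The answer can be turned into a standing check. The sketch assumes a quarterly cadence approximated as 92 days and compares the last drill's duration against the RTO; the function name, thresholds, and messages are hypothetical.

```python
from datetime import date, timedelta


def restore_drill_issues(last_drill: date, today: date, drill_minutes: float,
                         rto_minutes: float, max_age_days: int = 92) -> list[str]:
    """Return problems with the restore-drill record; empty means compliant."""
    issues = []
    if today - last_drill > timedelta(days=max_age_days):  # roughly one quarter
        issues.append("overdue: no restore drill within the last quarter")
    if drill_minutes > rto_minutes:
        issues.append("too slow: the last restore took longer than the RTO")
    return issues


if __name__ == "__main__":
    print(restore_drill_issues(date(2024, 1, 1), date(2024, 6, 1), drill_minutes=45, rto_minutes=60))
```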

Executive Blind Spots in PostgreSQL SLAs

- Latency hidden in averages: Teams often report mean response times, but SLA violations usually occur in the p95 or p99 tail latency that customers actually feel.

- Backups reported as “successful” but never restored: A green checkmark does not equal a working recovery.

- HA tested in dev, not under prod load: Failover that works in staging may fail under concurrency and scale.

- Shallow Postgres expertise: When generalist teams manage Postgres without specialists, risks remain invisible until they surface during escalations.
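The first blind spot, latency hidden in averages, is easy to demonstrate. The sketch below uses made-up latencies, 90 requests at 50 ms and 10 at 2,000 ms, and computes nearest-rank percentiles alongside the mean.

```python
import math
from statistics import mean


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("percentile of an empty sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


if __name__ == "__main__":
    latencies_ms = [50.0] * 90 + [2000.0] * 10  # made-up sample
    print(f"mean={mean(latencies_ms):.1f} ms")
    for p in (50, 95, 99):
        print(f"p{p}={percentile(latencies_ms, p):.0f} ms")
```

The mean of 245 ms could pass a hypothetical 500 ms target while one request in ten takes two seconds, which the p95 of 2,000 ms exposes.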

You don’t want to be learning about these failures during a customer call. Proactivity is the path to trust.

Building SLA-Aware PostgreSQL Operations

| Area | Recommendation |
|---|---|
| Performance Monitoring | Track query latency (pg_stat_statements, pg_stat_activity), deadlocks, and bad plans. Use EXPLAIN regularly. |
| HA Readiness | Automate and rehearse failovers. Monitor WAL lag and replication health. Define RTO/RPO in the SLA context. |
| Backup Assurance | Integrate checksum verification and alerting. Perform real restore drills. Treat ‘restore success’ as the SLA metric, not just ‘backup success’. |

Key tools:

- pg_stat_activity / pg_stat_replication / pg_stat_statements

- pgBackRest / Barman

- Patroni or pgpool-II

- Metrics and alerts to detect decay before impact

Final Thoughts

More than mere legal commitments, SLAs are a pact of trust. In environments powered by Postgres, the fragility often hides in plain sight: a slow query, an untested failover, a silent backup failure. The difference between “SLA met” and “SLA broken” is often what you can’t see.