PostgreSQL High Availability & Disaster Recovery Solutions

When it is critical, you can count on us!

Downtime is not an option for mission-critical PostgreSQL databases. Even a few minutes of database unavailability can erode customer trust, disrupt operations, and result in lost revenue.

At Stormatics, we design tailored High Availability and Disaster Recovery solutions for PostgreSQL, ensuring 99.99% uptime and auto-failover in case of disruptions. Using techniques like clustering, streaming replication, and automated failover, our expert team safeguards your data and minimizes downtime.

Schedule a consultation today to see how we can help you achieve uninterrupted database operations.

5 good reasons to invest in High Availability & Disaster Recovery

Customer Success Story

99.99% Availability for PostgreSQL on MS Windows

The ecosystem supporting PostgreSQL is quite limited on Windows and that was the issue facing a critical government organization in the Middle East when they approached us.

This organization had moved from SQL Server to PostgreSQL and attempted to solve the high availability problem like they had done for their previous database – relying on the OS layer. This goes against best practices of setting up PostgreSQL clusters and is also highly unreliable – something the customer experienced firsthand when their database kept going down in production.

This was a challenging project, something that’s right up our alley. We enjoy solving the hard problems!

How we Ensure 99.99% Availability for PostgreSQL

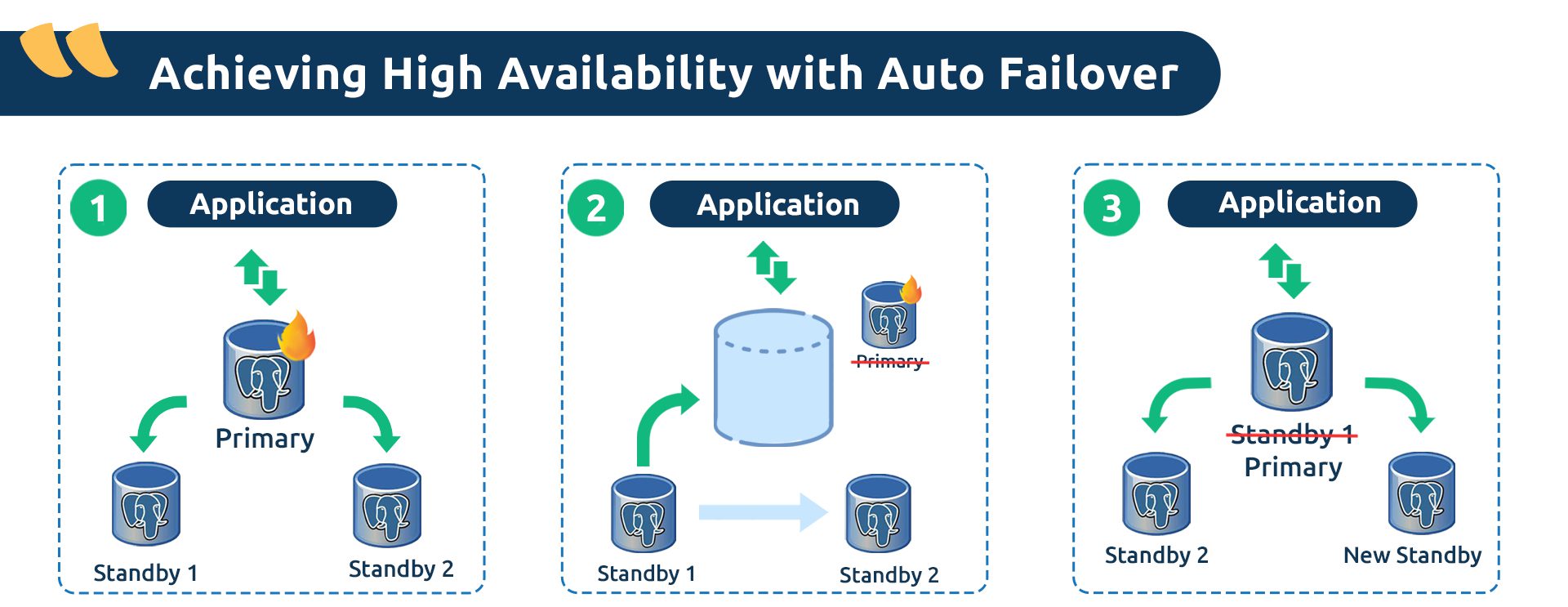

Our signature approach to PostgreSQL High Availability and Disaster Recovery is built on a multi-node architecture with replication and automatic failover. This ensures your data is always secure and accessible when you need it. The entire process is fully automated, eliminating the need for human intervention and reducing the risk of errors during critical moments.

We also design disaster recovery strategies customized to your organization’s Recovery Time Objective (RTO) and Recovery Point Objective (RPO) needs. These strategies help minimize data loss and speed up recovery in case of unexpected disruptions.

Frequently Asked Questions (FAQs)

Q. What does high availability mean?

High availability refers to the ability of a database system, such as PostgreSQL, to remain accessible and operational with minimal downtime. It is achieved by implementing redundancy, automated failover, and monitoring systems that ensure seamless service, even during failures.

Q. Does PostgreSQL have high availability?

Yes, PostgreSQL supports high availability by using clustering, replication, and failover mechanisms. These configurations ensure that PostgreSQL databases remain accessible, even during server failures, making it a reliable choice for critical applications.

Q. How to set up high availability in Postgres?

To set up high availability in PostgreSQL, configure primary-secondary replication using tools like pgpool-II, Patroni, or repmgr. Implement synchronous or asynchronous replication to sync data between the primary and standby servers, ensuring seamless failover in case of primary server failure.

Q. How do you get high availability in a database?

You can achieve high availability in databases like PostgreSQL by setting up replication, clustering, and automated failover. Use monitoring tools to detect failures and trigger failover to standby servers, ensuring continuous service and minimal downtime.

Q. Is PostgreSQL suitable for big data with high availability requirements?

Yes, PostgreSQL is well-suited for highly available big data solutions. It supports large datasets and offers features like table partitioning, replication, and clustering to handle big data workloads while maintaining high availability.

Q. Do big companies use PostgreSQL with high availability?

Many large enterprises, including those in financial services, healthcare, and technology sectors, use PostgreSQL with high availability setups. It is a cost-effective, reliable, and scalable option that supports critical applications and ensures continuous database operations.

Q. Does PostgreSQL have automatic failover?

Yes, PostgreSQL supports automatic failover when integrated with tools like Patroni, repmgr, or pgpool-II. These tools monitor the primary server’s health and automatically promote a standby server to primary if a failure occurs, reducing downtime.

Q. What is the best practice for creating a highly available PostgreSQL?

Best practices include using asynchronous replication, setting up load balancers for traffic distribution, and implementing automated failover. Regular failover testing, monitoring, and maintaining sufficient server resources are also essential to ensure optimal performance and high availability.

Q. How do you create a highly available cluster?

To create a highly available PostgreSQL cluster, deploy multiple database instances, set up replication, and configure automatic failover using tools like Patroni or repmgr. Add load balancers to distribute traffic evenly and perform regular testing to ensure the cluster operates smoothly.

Q. What is the difference between hot standby and warm standby in PostgreSQL?

In PostgreSQL, a hot standby allows read-only queries on the standby server, helping distribute read loads. A warm standby, however, is purely for failover and does not accept queries until it becomes the primary server.

Q. What are the three main high availability strategies?

The three main high availability strategies for PostgreSQL are:

- Replication: Copies data between servers to ensure redundancy.

- Clustering: Combines multiple nodes into a single system for increased resilience.

- Automated Failover: Switches to standby servers automatically during failures.

Q. How do you ensure database availability?

You can ensure database availability by introducing redundancy. Setting up replication, automated failover, and load balancing are some of the steps you can take. Regular health checks, monitoring tools, and backups are also vital to maintain a highly available PostgreSQL environment.

Q. What is Disaster Recovery in PostgreSQL?

Disaster Recovery (DR) in PostgreSQL refers to restoring database services after major failures. It involves using backups, point-in-time recovery (PITR), and replication to minimize data loss and ensure that PostgreSQL databases are restored quickly.

Q. What is the difference between Backup and Disaster Recovery?

Backup involves creating copies of database data to restore it if needed, while Disaster Recovery (DR) is a broader strategy that includes restoring services, data, and operations after a catastrophic failure. DR in PostgreSQL typically uses backups and replication in combination to achieve quick recovery.

Q. How to recover a corrupted table in PostgreSQL?

Recover a corrupted table in PostgreSQL by restoring from a recent backup or using point-in-time recovery (PITR) if WAL (Write-Ahead Logging) is configured appropriately. Regular backups and WAL archiving are essential for recovering corrupted data.

Q. How do you measure high availability?

High availability is measured in ‘Nines of Availability’.

- 90% (one nine) – 36.53 days of downtime per year

- 99% (two nines) – 3.65 days of downtime per year

- 99.9% (three nines) – 8.77 hours of downtime per year

- 99.99% (four nines) – 52.60 minutes of downtime per year

- 99.999% (five nines) – 5.26 minutes of downtime per year

Q. How to dump and restore PostgreSQL?

Use `pg_dump` to create a logical backup of your PostgreSQL database, saving it as a dump file. To restore, use `pg_restore` for custom-format backups or `psql` for plain-format backups. This process is crucial for disaster recovery.

Q. How to restore dump file in PostgreSQL using pgAdmin?

In pgAdmin, right-click on the target database, select ‘Restore’, choose the dump file, and configure the options. Click ‘Restore’ to initiate the process, making it easy to recover databases from backups in case of failure.